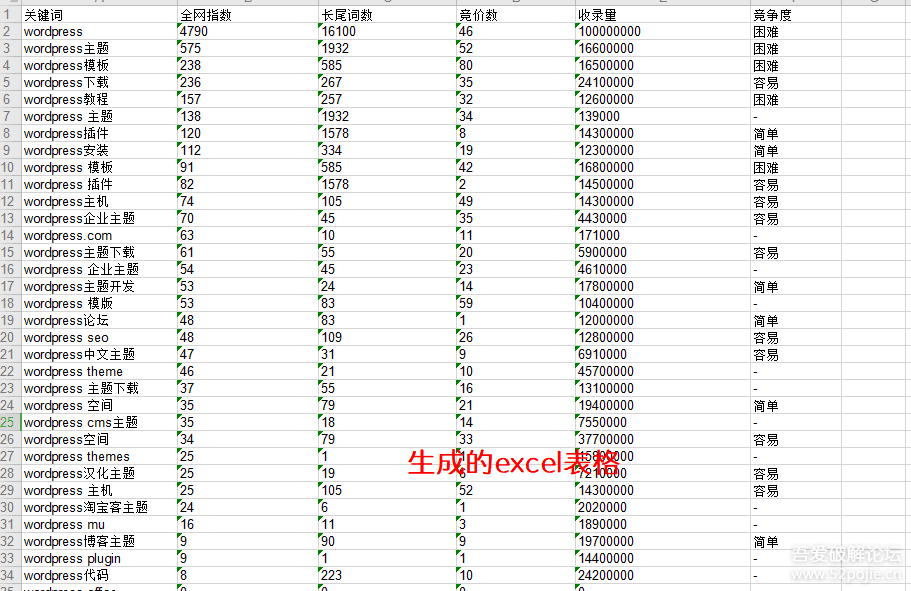

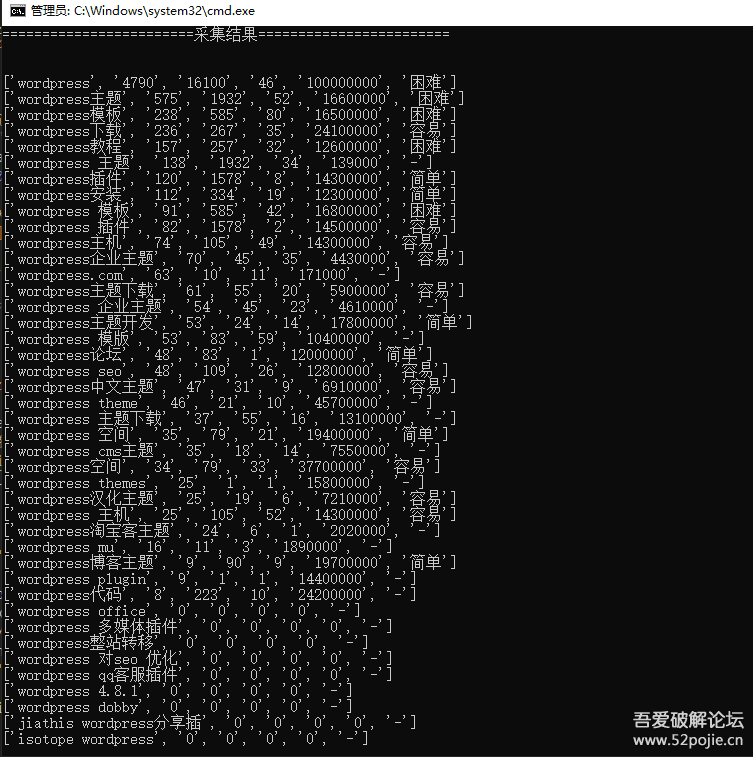

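Although I (Xiaoyu) run my sites in a pretty laid-back way, every now and then I still want to please the search-engine spiders, so I'm always on the lookout for scripts and tools that save manual work. Today I'm sharing a way to batch-collect website keywords into an Excel sheet with Python 3. I first posted this on the 52pojie (52破解) forum.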

```python
# Long-tail keyword mining with Chinaz Webmaster Tools (站长工具)
# -*- coding: utf-8 -*-
import re
import time

import requests
import xlwt
from lxml import etree

# Request headers copied from a browser session. The Cookie value is the
# author's own and has most likely expired; substitute your own if needed.
headers = {
    'Cookie': 'UM_distinctid=17153613a4caa7-0273e441439357-36664c08-1fa400-17153613a4d1002; CNZZDATA433095=cnzz_eid%3D1292662866-1594363276-%26ntime%3D1594363276; CNZZDATA5082706=cnzz_eid%3D1054283919-1594364025-%26ntime%3D1594364025; qHistory=aHR0cDovL3N0b29sLmNoaW5hei5jb20vYmFpZHUvd29yZHMuYXNweCvnmb7luqblhbPplK7or43mjJbmjph8aHR0cDovL3Rvb2wuY2hpbmF6LmNvbS90b29scy91cmxlbmNvZGUuYXNweF9VcmxFbmNvZGXnvJbnoIEv6Kej56CB',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',
    # The value must not repeat the "Accept:" header name
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3',
    'Connection': 'keep-alive',
    # 'br' removed: requests can only decode Brotli if the brotli package is installed
    'Accept-Encoding': 'gzip, deflate',
    'Accept-Language': 'zh-CN,zh;q=0.9',
}
```
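The script sends the keyword in two encoded forms: `search_keyword` and `get_page_number` POST it as form data (`kw=...`), while `get_keyword_datas` puts it into the URL path. requests should apply the UTF-8 percent-encoding for you; the standard-library sketch below only illustrates what the encoded values look like. The keyword `python教程` is a made-up sample.

```python
from urllib.parse import quote, urlencode

# Made-up sample keyword mixing ASCII and Chinese characters
keyword = 'python教程'

# Form body in the style of requests.post(url, data={'kw': keyword})
form_body = urlencode({'kw': keyword})

# URL path in the style of data.chinaz.com/keyword/allindex/<keyword>/<page>
page_url = 'https://data.chinaz.com/keyword/allindex/{0}/{1}'.format(quote(keyword), 1)

print(form_body)  # kw=python%E6%95%99%E7%A8%8B
print(page_url)   # https://data.chinaz.com/keyword/allindex/python%E6%95%99%E7%A8%8B/1
```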

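```python
# Check whether the site returns any long-tail keywords for this keyword
def search_keyword(keyword):
    data = {'kw': keyword}
    url = "http://stool.chinaz.com/baidu/words.aspx"
    html = requests.post(url, data=data, headers=headers, timeout=10).text
    con = etree.HTML(html)
    key_result = con.xpath('//*[@id="pagedivid"]/li/div/text()')
    # With no results the site shows "抱歉,未找到相关的数据。" ("Sorry, no related data found.").
    # Return True explicitly in every other case, so the caller never gets None.
    if key_result and '未找到相关的数据' in key_result[0]:
        print('\nNo related long-tail keywords found; please try another keyword.')
        return False
    return True
```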
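The "no results" test in `search_keyword` can be isolated as a pure function of the XPath result list, which makes it easy to check offline. `has_results` is a hypothetical helper, not part of the original script. Like the script, it assumes that an empty result list means the "not found" message is absent; it matches only the substring `未找到相关的数据`, so the message is recognized whether the site uses a half-width or full-width comma.

```python
NOT_FOUND_MARKER = '未找到相关的数据'  # core of the site's "no related data found" message

def has_results(texts):
    """Return True unless the first matched text is the site's "not found" message."""
    # Same assumption as the script: no matched text means results exist
    if not texts:
        return True
    return NOT_FOUND_MARKER not in texts[0]

print(has_results([]))                            # True
print(has_results(['抱歉,未找到相关的数据。']))      # False
print(has_results(['抱歉，未找到相关的数据。']))     # False (full-width comma)
print(has_results(['some other text']))            # True
```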

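```python
# Get the number of result pages for the keyword
def get_page_number(keyword):
    data = {'kw': keyword}
    url = "http://stool.chinaz.com/baidu/words.aspx"
    html = requests.post(url, data=data, headers=headers, timeout=10).text
    con = etree.HTML(html)
    page_num = con.xpath('//*[@id="pagedivid"]/div/ul/li[7]/text()')
    # The pager text reads "共N页" ("N pages in total"); capture N
    matches = re.findall(r'共(.+?)页', page_num[0], re.S) if page_num else []
    # Assumption: no pager text means the results fit on a single page
    page_number = matches[0].strip() if matches else '1'
    print('Preparing to mine long-tail keywords for ' + keyword)
    # return '1'  # for testing: uncomment to collect only the first page
    return page_number  # a string such as '12'
```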
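The page count comes from pager text matched with the pattern `共(.+?)页`. Here is a minimal sketch of the same idea as a standalone function, assuming the pager text looks like `共12页`. `parse_page_count` is a hypothetical name, the sample strings are made up, and it captures only digits (`\d+`) rather than `.+?` so that stray characters cannot break the later `int()` call. Treating missing pager text as one page is an assumption.

```python
import re

def parse_page_count(pager_text):
    """Extract N from pager text such as '共12页'; default to 1 when absent."""
    match = re.search(r'共\s*(\d+)\s*页', pager_text or '')
    return int(match.group(1)) if match else 1

print(parse_page_count('共12页'))         # 12
print(parse_page_count('第1页 共 3 页'))   # 3
print(parse_page_count(''))              # 1
print(parse_page_count(None))            # 1
```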

```python
# Collect the keyword data page by page
def get_keyword_datas(keyword, page_number):
    datas_list = []
    for i in range(1, page_number + 1):
        print(f'Collecting keyword data from page {i}...\n')
        url = "https://data.chinaz.com/keyword/allindex/{0}/{1}".format(keyword, i)
        html = requests.get(url, headers=headers, timeout=10).text
        con = etree.HTML(html)
        key_words = con.xpath('//*[@id="pagedivid"]/ul/li[2]/a/text()')        # keyword
        overall_indexs = con.xpath('//*[@id="pagedivid"]/ul/li[3]/a/text()')   # overall (network-wide) index
        changwei_indexs = con.xpath('//*[@id="pagedivid"]/ul/li[4]/a/text()')  # number of long-tail keywords
        jingjias = con.xpath('//*[@id="pagedivid"]/ul/li[5]/a/text()')         # number of bids
        collections = con.xpath('//*[@id="pagedivid"]/ul/li[9]/text()')        # number of indexed pages
        jingzhengs = con.xpath('//*[@id="pagedivid"]/ul/li[11]/text()')        # competition level
        # zip() stops at the shortest list: a missing cell in one column shifts
        # that column's values against the others and drops the last row
        for row in zip(key_words, overall_indexs, changwei_indexs, jingjias, collections, jingzhengs):
            data = [cell.strip() for cell in row]
            print(data)
            datas_list.append(data)  # merge this page's rows into the overall list
        print()
        time.sleep(3)  # pause between pages so the site isn't hammered
    return datas_list
```
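`get_keyword_datas` extracts each column with its own XPath and then `zip`s the columns together. If one cell on a page is empty, `text()` returns nothing for it, that column's list becomes shorter, and the rows silently misalign or disappear. A more robust approach is to walk the page row by row. The sketch below swaps in the standard library's `xml.etree.ElementTree` on a small, made-up, well-formed snippet (real pages usually need lxml's lenient HTML parser), and the one-`<ul>`-per-row, one-`<li>`-per-cell layout is an assumption inferred from the script's XPaths. `parse_rows` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Made-up, simplified markup: one <ul> per keyword row, one <li> per column
SAMPLE = """
<div id="pagedivid">
  <ul><li>1</li><li><a>python</a></li><li><a>1200</a></li></ul>
  <ul><li>2</li><li><a>python教程</a></li><li></li></ul>
</div>
"""

def parse_rows(markup):
    """Return one list of cell texts per <ul>, skipping the first cell (never read by the script)."""
    root = ET.fromstring(markup.strip())
    rows = []
    for ul in root.findall('ul'):
        # itertext() collects text inside nested <a> tags; an empty cell yields ''
        cells = [''.join(li.itertext()).strip() for li in ul.findall('li')]
        rows.append(cells[1:])
    return rows

# Column-wise extraction loses the second row because its index cell is empty
keywords = ['python', 'python教程']
indexes = ['1200']
print(list(zip(keywords, indexes)))  # [('python', '1200')]

# Row-wise extraction keeps both rows and marks the gap with ''
print(parse_rows(SAMPLE))  # [['python', '1200'], ['python教程', '']]
```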

```python
# Save the keyword data as an Excel (.xls) file; "bcsj" is pinyin shorthand for 保存数据 ("save data")
def bcsj(keyword, data):
    workbook = xlwt.Workbook(encoding='utf-8')
    booksheet = workbook.add_sheet('Sheet 1', cell_overwrite_ok=True)
    title = [['Keyword', 'Overall index', 'Long-tail keywords', 'Bids', 'Indexed pages', 'Competition']]
    rows = title + data  # header row first, then the data rows
    for i, row in enumerate(rows):
        for j, col in enumerate(row):
            booksheet.write(i, j, col)
    workbook.save(f'{keyword}.xls')
    print(f'Saved data to {keyword}.xls')
```
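xlwt writes the legacy `.xls` format, which caps a sheet at 65,536 rows and 256 columns. If that limit or the extra dependency is a concern, one alternative (not what the original script does) is the standard library's `csv` module. Writing with the `utf-8-sig` encoding prepends a byte-order mark, which helps Excel recognize the file as UTF-8 so Chinese keywords display correctly. `save_csv` and its `directory` parameter are hypothetical.

```python
import csv
import os

HEADER = ['Keyword', 'Overall index', 'Long-tail keywords', 'Bids', 'Indexed pages', 'Competition']

def save_csv(keyword, rows, directory='.'):
    """Write the header plus rows to <directory>/<keyword>.csv and return the path."""
    path = os.path.join(directory, f'{keyword}.csv')
    # newline='' is what the csv docs recommend; utf-8-sig adds a BOM for Excel
    with open(path, 'w', newline='', encoding='utf-8-sig') as f:
        writer = csv.writer(f)
        writer.writerow(HEADER)
        writer.writerows(rows)
    return path

# Possible use in the main block instead of the .xls writer:
# save_csv(keyword, datas_list)
```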

```python
if __name__ == '__main__':
    keyword = input('Enter a keyword>> ')
    print('Querying, please wait...')
    if search_keyword(keyword):
        page_number = get_page_number(keyword)
        print(f'Found {page_number} page(s) in total')
        input_page_num = input('How many pages do you want to collect?>> ')
        # Never request more pages than the site reports
        page_number = min(int(input_page_num), int(page_number))
        print('\nCollecting keyword data, please wait...\n')
        datas_list = get_keyword_datas(keyword, page_number)
        print('\n======================== Collection finished ========================\n')
        bcsj(keyword, datas_list)
```
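In the main block, `int(input_page_num)` raises `ValueError` if the user types anything that isn't a number. Here is a minimal sketch of a more forgiving page-count choice; `choose_page_count` is a hypothetical helper, and treating blank or non-numeric input as "collect every page" is an assumed policy, not something the original script does.

```python
def choose_page_count(user_input, available):
    """Clamp the requested page count to the range [1, available].

    Assumed policy: blank or non-numeric input means "collect every page".
    """
    available = int(available)
    try:
        requested = int(user_input.strip())
    except ValueError:
        return available
    return max(1, min(requested, available))

print(choose_page_count('5', '3'))  # 3
print(choose_page_count('2', 10))   # 2
print(choose_page_count('', '4'))   # 4
print(choose_page_count('0', '4'))  # 1
```

It could stand in for the line that compares `input_page_num` with `page_number`, e.g. `page_number = choose_page_count(input_page_num, page_number)`.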